Congress wants to regulate AI. Big Tech is eager to help

Members of Congress want to regulate artificial intelligence, and Big Tech is watching — and lobbying.

Senate Majority Leader Charles E. Schumer (D-N.Y.) launched a major push on AI regulation late last month, promising his colleagues hearings and a series of “AI insight forums” that will bring top AI experts to Washington and eventually lead to the creation of draft legislation.

Over the next several months, members of Congress — only a few of whom have any technical expertise — will have to choose whether to embrace a strict regulatory framework for AI or a system that defers more to tech interests. Democratic and Republican lawmakers will have to grapple with the daunting task of learning about rapidly developing technology, and with the fact that even experts disagree about what AI regulations should look like.

California’s Silicon Valley-area members of Congress, including Reps. Zoe Lofgren (D-San Jose), Ro Khanna (D-Fremont) and Anna G. Eshoo (D-Menlo Park), are caught in a particular jam. All three are Democrats and support the idea of regulating tech companies.

But those companies are the economic engines of the trio’s districts, and many of their constituents work in the industry. Move too slowly, and they could alienate their party’s national base — especially unions that worry about AI eliminating jobs. Move too quickly, and they could damage their standing at home — and make powerful enemies in the process.

Technology interests, especially OpenAI, the nonprofit (with a subsidiary for-profit corporation) that created ChatGPT, have gone on the offensive in Washington, arguing for regulations that will prevent the technology from posing an existential threat to humanity. They’ve engaged in a lobbying spree: According to an analysis by OpenSecrets, which tracks money in politics, 123 companies, universities and trade associations spent a collective $94 million lobbying the federal government on issues including AI in the first quarter of 2023.

Sam Altman, OpenAI’s 38-year-old chief executive, has met with at least 100 lawmakers in Washington recently, and OpenAI is on the hunt for a chief congressional lobbyist.

OpenAI’s media office did not respond to a request for comment regarding the company’s position on federal regulation of AI. But in written testimony to the Senate Judiciary Committee, Altman wrote that AI regulation “is essential,” and that OpenAI is “eager to help policymakers determine how to facilitate regulation that balances incentivizing safety while ensuring people are able to access the technology’s benefits.”

He suggested broad ideas: AI companies should adhere to “an appropriate set of safety requirements,” which could entail a government-run licensing or registration system. OpenAI is “actively engaging with policymakers around the world to help them understand our tools and discuss regulatory options,” he testified.

Members of Congress should cultivate a healthy skepticism of what they hear about AI regulation from tech interests, said Marietje Schaake, an international policy fellow at Stanford’s Institute for Human-Centered Artificial Intelligence and former member of the European parliament. “Any and all suggestions that are coming from key stakeholders, like companies, should be seen through the lens of: What will it mean for their profits?” she said. “What will it mean for their bottom line? The way things are framed reveals the interest of the messenger.”

Schaake expressed concern that when Altman and others warn of existential threats from AI, they are putting the regulatory focus on the horizon, rather than in the present. If lawmakers are worrying about AI ending humanity, they’re overlooking the more immediate, less dramatic worries.

Big Tech power players currently have “a fetish for regulation,” which “can’t help but strike a number of people in Washington as a thinly veiled attempt to absolve themselves of the social consequences of what they’re doing,” a source familiar with AI firms’ congressional engagement, who requested anonymity to speak candidly about private conversations, told The Times. “There is, unfortunately, a dangerous dynamic at play between members of Congress who will rely on technical experts.”

The source’s conversations with Washington insiders revealed a deference to Altman.

“I’ve had multiple senior individuals in Washington, people who are or listening to members of Congress, say something to the effect of, ‘Well, Sam seems like a good guy. And he certainly came off well in the hearing so maybe we just let it play out.’”

Publicly, members of Congress say they will write laws independent of tech interests. In hearing rooms across the Capitol, lawmakers are still the questioners, and industry experts are the questioned. Lofgren, the ranking member on the House Science, Space and Technology Committee, told The Times that tech interests have not lobbied her on the scope of AI regulations.

“I will say this: I have not been lobbied by any tech company on what to do,” Lofgren said. “And I haven’t heard of any other member of Congress who’s been lobbied on that.”

Robin Swanson, an advocate for tech regulation who has managed campaigns for statewide privacy laws, praised Lofgren’s fellow Silicon Valley representatives Eshoo and Khanna for their proactive policy positions on the issue.

Schumer emphasized that he doesn’t want tech companies drafting the rules.

“Individuals in the private sector can’t do the work of protecting our country,” Schumer said in a speech announcing his vision for the Senate’s approach to AI. “Even if many developers have good intentions there will always be rogue actors, unscrupulous companies, foreign adversaries that will seek to harm us. Companies may not be willing to insert guardrails on their own, certainly not if their competitors won’t be forced to do so. That is why we’re here today. I believe Congress must join the AI revolution.”

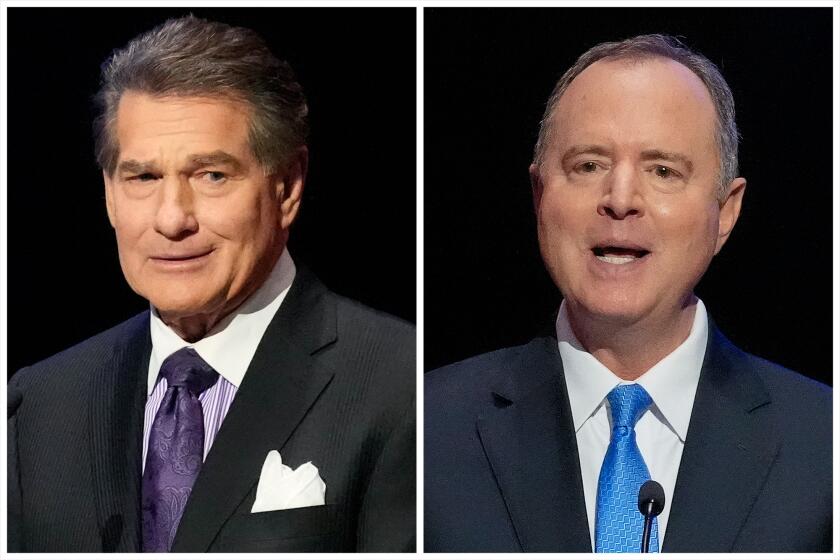

Democrat Lexi Reese, a Silicon Valley executive, announced Thursday that she is running for the U.S. Senate seat being vacated by retiring Sen. Dianne Feinstein.

But technology interests tend to employ skillful lobbyists who can influence the effects of a policy with minor edits, Swanson said. When she was working on behalf of the California Consumer Privacy Act, interests from the tech sector asked for a change in just seven words across the lengthy, complex legislation.

That seven-word edit, Swanson said, would have defeated the act’s purpose.

“They certainly have very smart and wily folks on their side who know the technology well enough to know where to hide the goods,” she told The Times. “So we have to have equally as adept people who genuinely care about privacy and guardrails on our side.”

Lofgren didn’t seem worried. She believes that her colleagues need a firmer understanding of the technology before sitting down to write policy. She has advocated for patience in the process.

“I don’t think we’re in a position to know what to do,” Lofgren said. “And I do think it’s important to have a clue about what to do before we do it. This technology is moving at a rapid pace. So we don’t have endless time. But we have enough time to try and figure out what we’re doing before we rush off and potentially do something stupid.”

Congress will take the lead in regulating AI, she insisted.

“It’s our responsibility to write whatever it is we’re going to write,” Lofgren said.

Congressional offices themselves appear worried about AI technology. The House is imposing new guardrails on how its employees use large language models such as ChatGPT. Offices are allowed to use only ChatGPT Plus, a paid, premium version that includes additional privacy features. Axios reported the new guidelines last week.

Khanna, who leans into his persona as “the Silicon Valley congressman,” told The Times in a statement that lawmakers should work with AI ethicists in his district to ensure that safe legislation is produced.

“The startups in my district are at the forefront of groundbreaking AI research and development, tackling complex challenges, and making remarkable strides towards improving lives and preserving our planet,” Khanna said. “Congress must craft smart legislation to regulate the ethics and safety of AI that won’t stifle innovation.”

By racing to regulate AI, lawmakers could miss the opportunity to address some of the technology’s less obvious dangers. Machine learning algorithms are often biased, explained Eric Rice, who founded USC’s Center for AI in Society. Several years ago, researchers found that a popular healthcare risk-prediction algorithm was racially biased, with Black patients receiving lower risk scores.

In their conversations about AI, Rice said, lawmakers should consider how the technology could affect equity and fairness.

“We want to ensure that we’re not using AI systems that are disadvantaging people who are Black or that are disadvantaging women or disadvantaging people from rural communities,” Rice added. “I think that’s a piece of the regulation puzzle.”

As technology companies rapidly innovate, Congress is moving slowly on just about everything. The Senate is voting less often than it has in the past and taking a long time to do so. In the House, the hard-right Freedom Caucus has weakened Republicans’ hold on the order and direction of the legislative process.

Analysts aren’t sure whether political polarization within Congress or among Americans will hurt progress on AI discussions. The debate doesn’t appear particularly partisan yet, in part because most people don’t know where they fall on it: A Morning Consult poll says that 10% of Americans think generative AI output is “very trustworthy,” 11% think it’s “not at all trustworthy,” and 80% aren’t decided. In addition, lawmakers appear to be moving forward in a bipartisan manner; Democratic Rep. Ted Lieu of Torrance and Republican Rep. Ken Buck of Colorado have introduced a bill alongside Eshoo that would establish a National Commission on Artificial Intelligence.

President Biden has his own stake in protecting Americans from the potential harms of AI. As a self-professed pro-union president, Biden must answer to unions across the country that worry that the technology could eliminate workers’ jobs. White House officials on Monday met with union leaders to discuss this issue, concluding that “government and employers need to collaborate with unions to fully understand the risks for workers and how to effectively mitigate potential harms,” according to a White House news release.

Both Democrats and Republicans support regulations that would require companies to label AI creations as such, Morning Consult polling has found. They also agree on banning AI in political advertisements. Overall, 57% of Democrats and 50% of Republicans think that AI technology development should be “heavily regulated” by the government.

But “regulation” on its own is a meaningless word, Schaake noted. Some regulations intervene in the market while others facilitate the market; some regulations benefit large companies and others harm them.

“To speak about being in favor or against regulation essentially does not tell us anything, because regulation — and this is something that senators above all should be very much aware of — can take you anywhere,” Schaake said.

Now that they agree on the need for regulation, lawmakers will have to agree on the details. Most proposals coming out of Congress are abstract: Lawmakers want to establish commissions and pay for studies. When it comes to actually writing a regulation, they’ll have to get more specific: Do they want to, as Altman suggests, establish a licensing regime? Will they strengthen data privacy laws to restrict what algorithms can train on?

“Congress is slow,” Swanson said. “And, Congress has potential to fall victim to the wolf in sheep’s clothing.” Because of this, she added, lawmakers need to be transparent about their policymaking process.

Schumer’s Senate panels are scheduled to begin meeting in September, and a bill won’t be passed in the chamber until after that. Even then, political disagreements could hinder the lawmaking process.

Meanwhile, the generative AI software market is expected to grow tenfold in the next five years.
